The Next Leap in AI Infrastructure Is Here

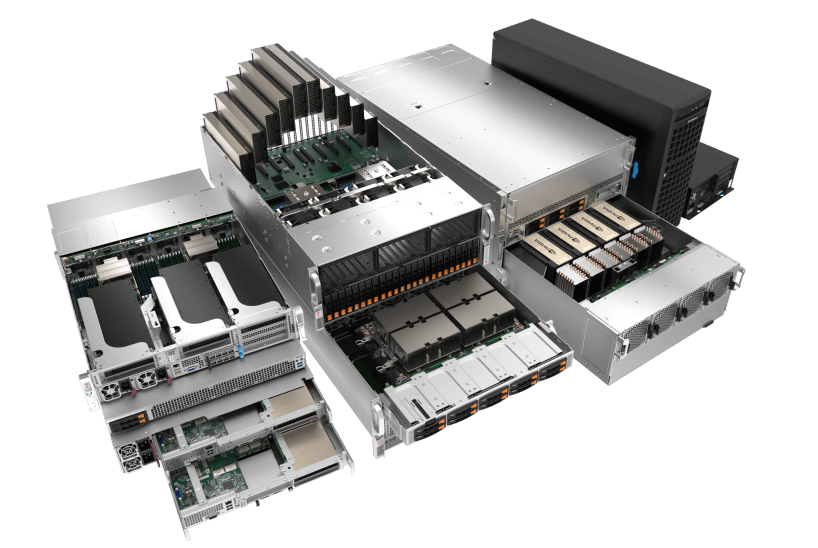

Today’s advanced AI models are rapidly changing our lives. Accelerated compute infrastructure is evolving at unprecedented speed across all market segments. Flexible, robust, and massively scalable infrastructure built on next-generation GPUs is opening a new chapter in AI.

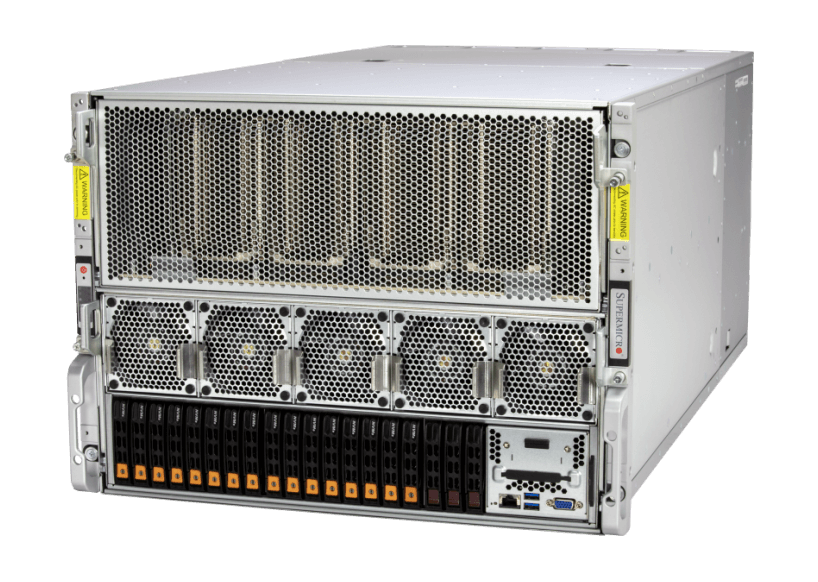

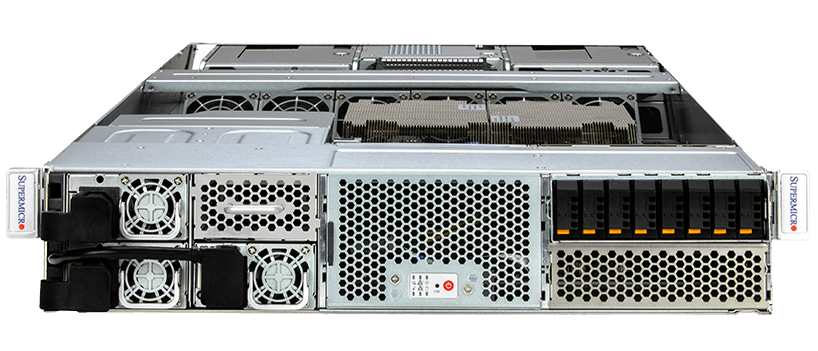

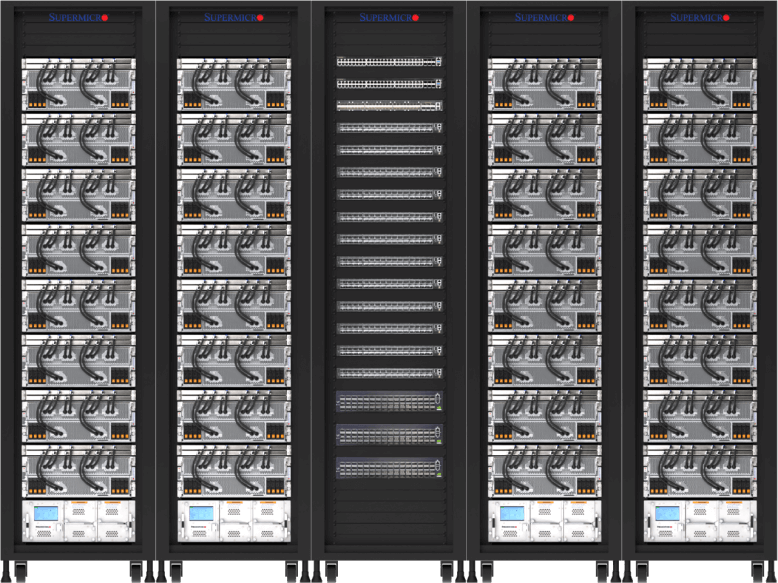

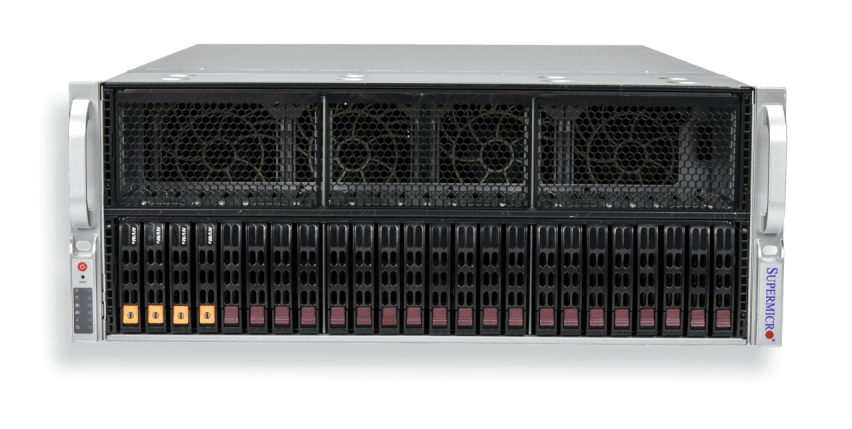

In close partnership with NVIDIA, Supermicro delivers one of the broadest selections of NVIDIA-Certified systems, maximizing performance and efficiency for deployments ranging from small enterprises to massive, unified AI training clusters built on the new NVIDIA H100 and H200 Tensor Core GPUs.

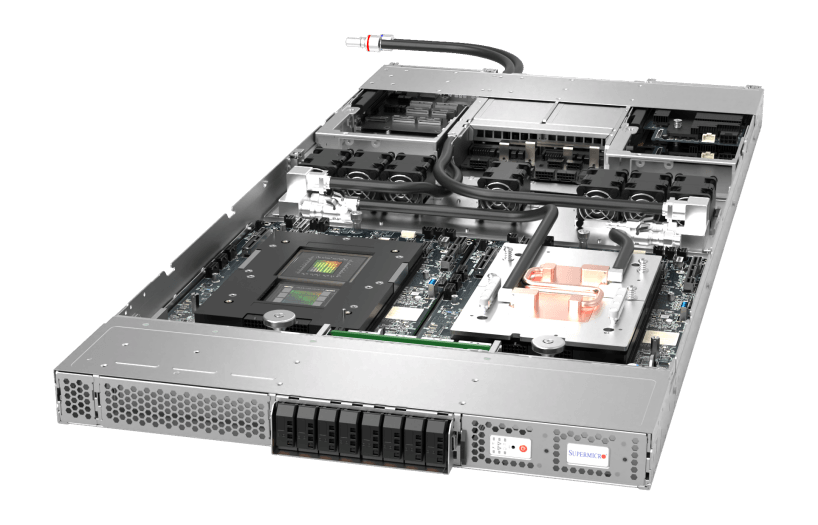

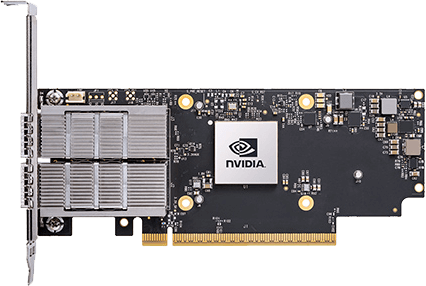

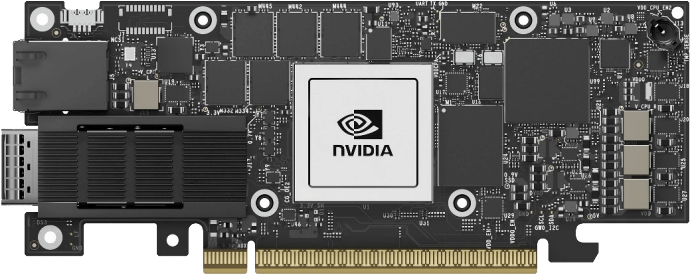

Together, we achieve up to nine times the training performance of the previous generation on some of the most challenging AI models, cutting a week of training time to just 20 hours. Supermicro systems with H100 PCIe or HGX H100 GPUs, as well as the newly announced HGX H200 GPUs, bring PCIe 5.0 connectivity, fourth-generation NVLink, and NVLink Network for scale-out. They also support the new NVIDIA ConnectX®-7 and BlueField®-3 cards, which enable GPUDirect RDMA and GPUDirect Storage through NVIDIA Magnum IO and NVIDIA AI Enterprise software.