Cloud-Scale AI Training and Inference

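According to OpenAI, demand for high-performance AI/deep learning (DL) training compute has doubled every 3.5 months since 2013. That growth is accelerating as datasets grow larger and as more applications and services are built on large language models (LLMs), computer vision, recommendation systems, and similar technologies.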
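To put the quoted doubling period in perspective, the short sketch below converts "doubles every 3.5 months" into a growth factor over longer periods. It assumes steady exponential growth, which is a simplification; the function name `growth_factor` is illustrative and does not come from any source.

```python
DOUBLING_MONTHS = 3.5  # doubling period quoted in the text


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Return the multiplicative growth after `months`,
    assuming demand doubles once every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


per_year = growth_factor(12)
print(f"Growth over 12 months: {per_year:.1f}x")
print(f"Growth over 24 months: {growth_factor(24):.0f}x")
```

Under this assumption, compute demand grows by roughly 10.8x per year.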

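As demand for training and inference performance, throughput, and capacity grows, the industry needs purpose-built systems that offer greater efficiency, lower cost, ease of deployment, flexibility for customization, and scalability. AI has become an essential technology in areas as diverse as copilots, virtual assistants, manufacturing automation, autonomous vehicle operations, and medical imaging. Supermicro and Intel provide cloud-scale system and rack designs with Intel Gaudi AI accelerators.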

New Supermicro Gaudi® 3 AI Training and Inference Platform

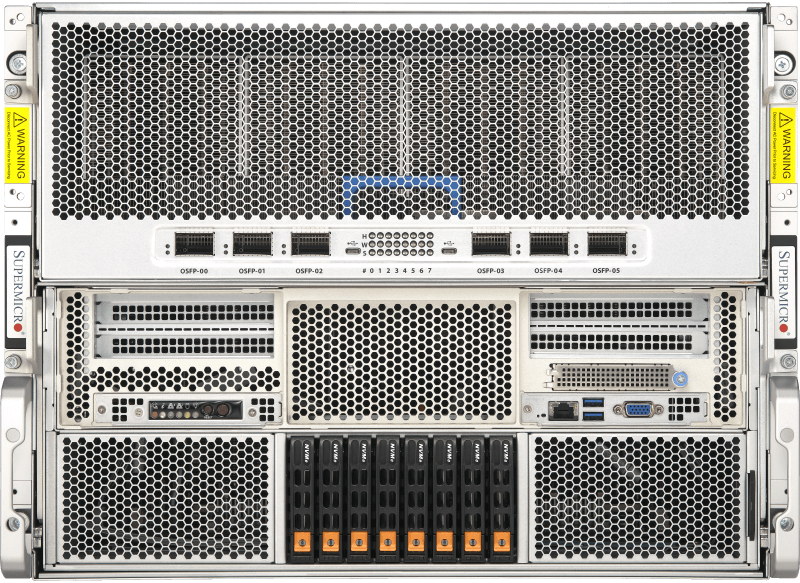

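Bringing a new choice to the enterprise AI market, the Supermicro AI training platform is built on the third-generation Intel® Gaudi 3 accelerator and is designed to further increase the efficiency of large-scale AI model training and AI inference. Available in both air-cooled and liquid-cooled configurations, the Supermicro Gaudi 3 system scales easily to meet a wide range of AI workload requirements.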

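- GPU: 8 Gaudi 3 HL-325L (air-cooled) or HL-335 (liquid-cooled) accelerators on an OAM 2.0 baseboard

- CPU: Dual Intel® Xeon® 6 processors

- Memory: 24 DIMMs, up to 6TB of memory at 1DPC

- Drives: Up to 8 hot-swap PCIe 5.0 NVMe drives

- Power: 8 x 3000W high-efficiency, fully redundant (4+4) Titanium Level power supplies

- Networking: 6 on-board OSFP 800GbE ports for scale-out

- Expansion slots: 2 PCIe 5.0 x16 (FHHL) + 2 PCIe 5.0 x8 (FHHL)

- Workloads: AI training and inference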
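The figures in the list above imply a few aggregate numbers that are useful for capacity planning. The sketch below derives them with plain arithmetic. It assumes raw line rates with no protocol overhead, reads "4+4" as four active plus four redundant power supplies, and takes 1 TB as 1024 GB; all variable names are illustrative.

```python
# Figures copied from the specification list above.
OSFP_PORTS = 6
PORT_SPEED_GBPS = 800   # 800GbE per OSFP port
PSU_COUNT = 8
PSU_WATTS = 3000
REDUNDANT_PSUS = 4      # assumption: "4+4" means 4 active + 4 redundant
DIMM_SLOTS = 24
MAX_MEMORY_TB = 6

# Aggregate scale-out bandwidth (raw line rate, overhead ignored).
scale_out_gbps = OSFP_PORTS * PORT_SPEED_GBPS
scale_out_gbytes_per_s = scale_out_gbps / 8

# Power available while still keeping full redundancy.
usable_power_w = (PSU_COUNT - REDUNDANT_PSUS) * PSU_WATTS

# DIMM capacity needed to reach the maximum (assumes 1 TB = 1024 GB).
gb_per_dimm = MAX_MEMORY_TB * 1024 / DIMM_SLOTS

print(f"Scale-out bandwidth: {scale_out_gbps} Gb/s ({scale_out_gbytes_per_s:.0f} GB/s)")
print(f"Power budget with full redundancy: {usable_power_w} W")
print(f"Capacity per DIMM at maximum memory: {gb_per_dimm:.0f} GB")
```

These are upper bounds derived from the listed specifications, not measured results.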

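Supermicro AI Training Server

Building on the success of the Supermicro AI training system, the Gaudi 2 AI server prioritizes two key considerations. The first is integrating AI accelerators with built-in high-speed networking modules to drive operational efficiency when training state-of-the-art AI models. The second is giving the AI industry the choice it needs.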

- GPU: 8 Gaudi2 HL-225H mezzanine cards

- CPU: Dual 3rd Gen Intel® Xeon® Scalable processors

- Memory: 32 DIMMs, up to 8TB registered ECC DDR4-3200MHz SDRAM

- Drives: Up to 24 hot-swap drives (SATA/NVMe/SAS)

- Power: 6 x 3000W high-efficiency (54V+12V) fully redundant power supplies

- Networking: 24 x 100GbE (48 x 56Gb) PAM4 SerDes links via 6 QSFP-DD ports

- Expansion slots: 2 x PCIe 4.0 switches

- Workloads: AI training and inference
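The networking line above packs three numbers together (24 links, 48 SerDes lanes, 6 QSFP-DD ports). The sketch below shows how they relate, assuming links and lanes are spread evenly across the ports and counting each link at its nominal 100 Gb/s. The variable names are illustrative.

```python
# Figures copied from the networking line in the list above.
LINKS_100GBE = 24
SERDES_LANES = 48     # 56Gb PAM4 lanes
QSFP_DD_PORTS = 6
LINK_GBPS = 100       # nominal rate of one 100GbE link

# Assumption: links and lanes are distributed evenly.
lanes_per_link = SERDES_LANES // LINKS_100GBE
links_per_port = LINKS_100GBE // QSFP_DD_PORTS
gbps_per_port = links_per_port * LINK_GBPS
total_gbps = LINKS_100GBE * LINK_GBPS

print(f"SerDes lanes per 100GbE link: {lanes_per_link}")
print(f"100GbE links per QSFP-DD port: {links_per_port}")
print(f"Nominal bandwidth per QSFP-DD port: {gbps_per_port} Gb/s")
print(f"Nominal aggregate bandwidth: {total_gbps} Gb/s")
```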

Supermicro systems with Intel® Gaudi® 3 are optimized for real-world AI scenarios.

Supermicro systems with Intel® Xeon® 6 processors (with P-cores) and Intel Gaudi 3 accelerators showed a performance improvement over the previous generation.

Supermicro Intel® Gaudi® AI Accelerator Cluster Reference Design

Built on open-source software and industry-standard Ethernet, Supermicro systems with Intel® Gaudi® 3 accelerators reduce the cost of AI solutions and speed them up.

Supermicro Gaudi 3 AI systems deliver scalable performance to meet AI requirements.

A broad range of solutions optimized for data centers of every size and workload, enabling new services and higher customer satisfaction

Supermicro Gaudi 3 systems advance enterprise AI infrastructure

High-bandwidth AI systems using Intel Xeon 6 processors enable efficient LLM and GenAI training and inference at enterprise scale

Supermicro Hyper for enterprise AI workloads on the VMware platform

Compute AI workload use cases: large language models (LLMs) and AI image recognition, with ResNet50 on the Intel® Data Center GPU Flex 170

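Accelerating AI computing with Supermicro on the Intel® Developer Cloud

Supermicro systems with Intel® Xeon® processors and Intel® Gaudi® 2 AI accelerators deliver high-performance, high-efficiency AI cloud computing, training, and inference for developers and enterprises.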

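Superior media processing and delivery solutions based on Supermicro and the Intel® Data Center GPU Flex Series

Supermicro systems with the Intel® Data Center GPU Flex Series

Supermicro: New media processing solutions based on the Intel Data Center GPU Flex Series

Our product experts explain the new Supermicro solutions based on the recently announced Intel Data Center GPU Flex Series. See how these solutions can benefit you and your business.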

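Enabling scalable cloud gaming

Supermicro systems with the Intel® Data Center GPU Flex Series

Supermicro provides every system component that cloud service providers need to build environmentally friendly, cost-effective, and profitable cloud gaming infrastructure.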

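Innovative solutions for cloud gaming, media, transcoding, and AI inference

September 8, 2022, 10:00 a.m. PDT

In an informal session, Supermicro experts discuss the benefits of solutions for cloud gaming, media delivery, transcoding, and AI inference using the newly announced Intel Flex Series GPUs. The webinar covers the Supermicro advantage, optimal server configurations, and the benefits of adopting Intel Flex Series GPUs.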

High-performance, high-efficiency AI training system from Supermicro and Habana®

Up to 40% better price/performance than traditional AI solutions for deep learning training