Instinct™ MI350 Series/MI325X

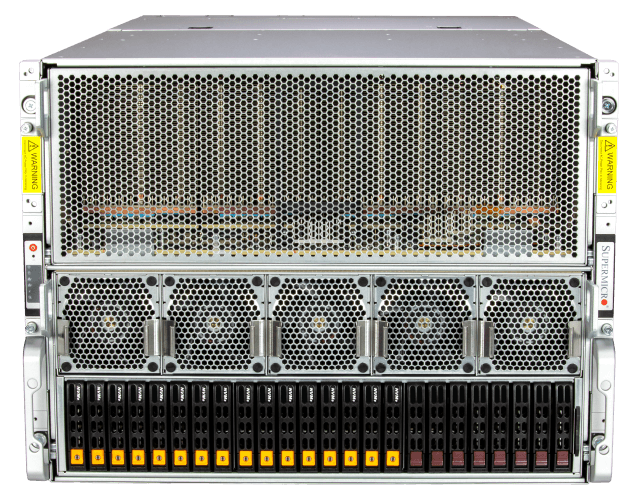

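Supermicro unleashes the true power of large-scale infrastructure with servers built on its proven AI building-block system architecture and powered by 5th Gen AMD EPYC™ processors and AMD MI350 Series GPUs. The systems use an industry-standard universal baseboard (UBB 2.0) model with 8 AMD MI350 Series accelerators and more than 2 TB of HBM3e memory, which helps them process the most demanding AI models.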
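As a rough check on the "more than 2 TB" figure, the aggregate capacity can be computed from the per-accelerator capacity. The sketch below assumes 288 GB of HBM3e per MI350 Series accelerator, a value taken from AMD's published specifications rather than from this page; the function and constant names are illustrative only.

```python
def aggregate_hbm_tb(gpu_count: int, hbm_gb_per_gpu: int) -> float:
    """Return total HBM capacity in decimal terabytes (1 TB = 1000 GB)."""
    return gpu_count * hbm_gb_per_gpu / 1000


# Assumption: 288 GB of HBM3e per MI350 Series accelerator (not stated on this page).
MI350_HBM_GB = 288

total_tb = aggregate_hbm_tb(8, MI350_HBM_GB)
print(f"8 x {MI350_HBM_GB} GB = {total_tb:.3f} TB")
```

Under that assumption, an 8-accelerator baseboard carries 2.304 TB, consistent with the "more than 2 TB" claim.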

Resources

MI325X Air-Cooled and Liquid-Cooled Systems

- MI355X, air-cooled
- MI350X, MI325X, air-cooled
- MI355X, MI325X, liquid-cooled

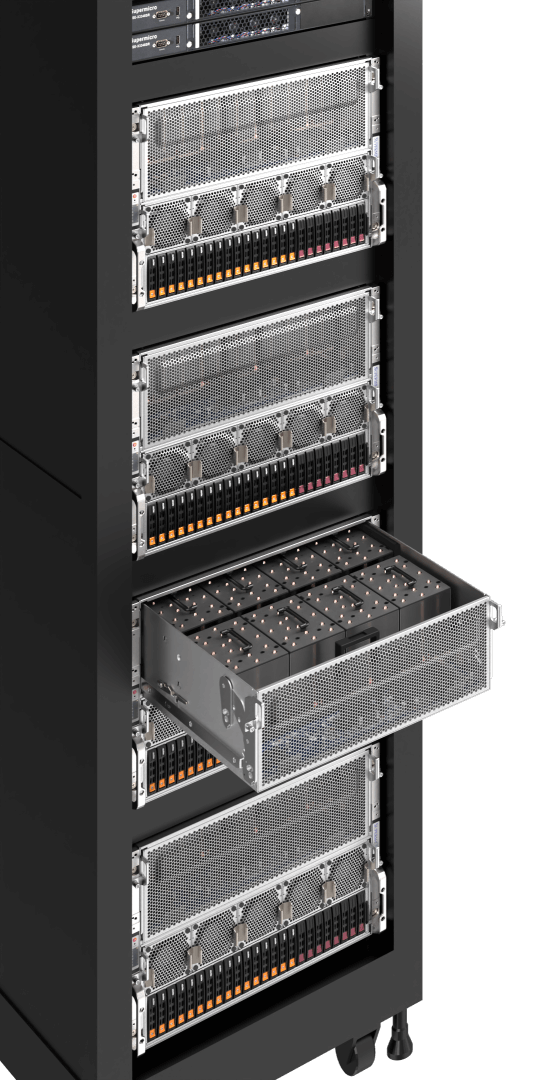

DP AMD 10U System with AMD Instinct 8-GPU

DP AMD 8U System with AMD Instinct 8-GPU

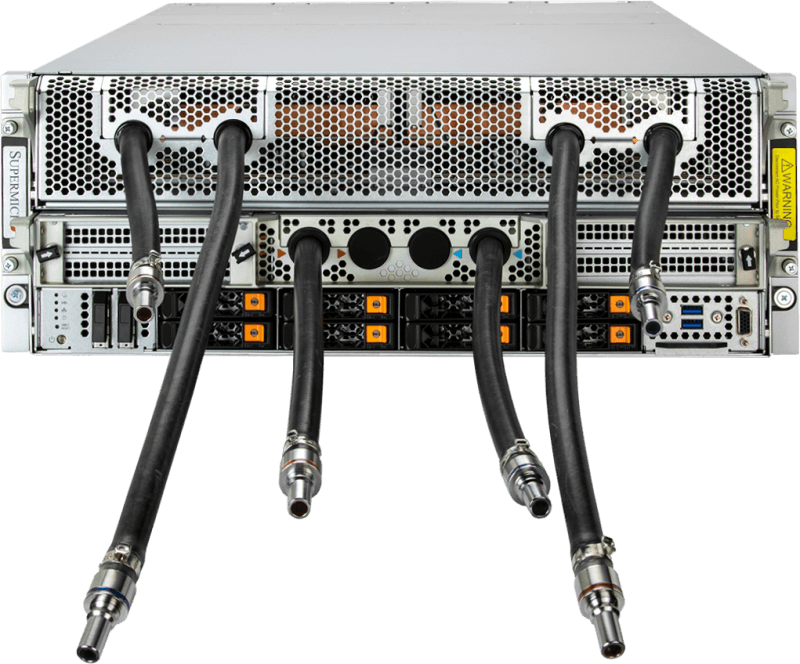

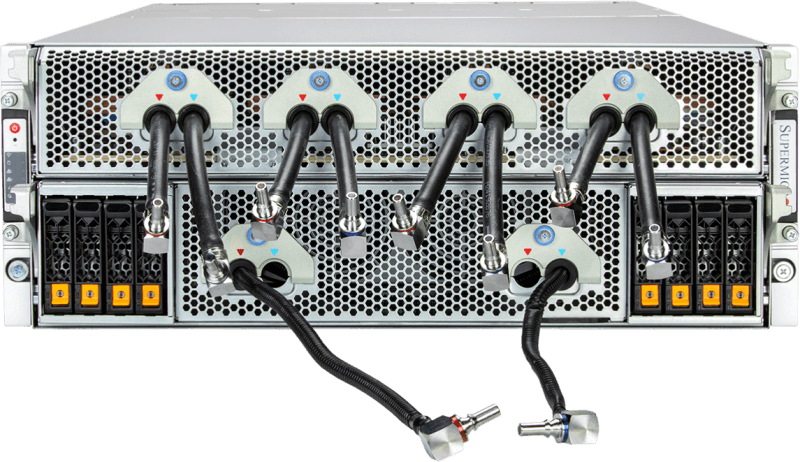

DP AMD 4U System with AMD Instinct 8-GPU and Liquid Cooling

Instinct™ MI300X/A

The MI300X 8-GPU system features AMD Infinity Fabric™ Links, enabling up to 896 GB/s of theoretical peak P2P I/O bandwidth on an open-standard platform with an industry-leading 1.5 TB of HBM3 GPU memory in a single server node.
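The 896 GB/s and 1.5 TB figures can be reproduced with simple arithmetic. The sketch below assumes a fully connected 8-GPU topology in which each GPU has one Infinity Fabric link to each of its 7 peers, 128 GB/s of peak bandwidth per link, and 192 GB of HBM3 per MI300X. These per-link and per-GPU values come from AMD's published specifications, not from this page, and all names are illustrative.

```python
# Assumptions (not stated on this page): per-link bandwidth and per-GPU memory.
GPUS_PER_NODE = 8
LINK_BANDWIDTH_GB_S = 128   # assumed peak bandwidth of one Infinity Fabric link
HBM3_GB_PER_GPU = 192       # assumed HBM3 capacity of one MI300X


def peak_p2p_bandwidth_gb_s(gpus: int, link_bw_gb_s: int) -> int:
    """Peak P2P bandwidth of one GPU with a direct link to every other GPU."""
    peer_links = gpus - 1
    return peer_links * link_bw_gb_s


def node_memory_gb(gpus: int, hbm_gb_per_gpu: int) -> int:
    """Total GPU memory across all GPUs in the node, in GB."""
    return gpus * hbm_gb_per_gpu


p2p = peak_p2p_bandwidth_gb_s(GPUS_PER_NODE, LINK_BANDWIDTH_GB_S)
memory = node_memory_gb(GPUS_PER_NODE, HBM3_GB_PER_GPU)
print(f"Peak P2P bandwidth per GPU: {p2p} GB/s")
print(f"GPU memory per node: {memory} GB")
```

With these inputs the bandwidth is 7 × 128 = 896 GB/s, and the memory total is 8 × 192 = 1536 GB, which corresponds to the quoted 1.5 TB when 1 TB is counted as 1024 GB.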

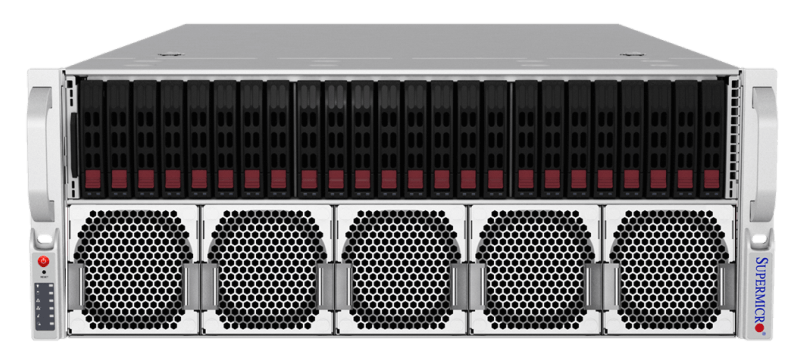

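Data center-optimized multiprocessor systems for HPC and AI inference. Supermicro's air-cooled 4U and liquid-cooled 2U quad-APU systems support the AMD MI300A, which integrates CPU and GPU, and draw on Supermicro's expertise in multiprocessor system architecture and cooling design. They are finely tuned for the convergence of AI and HPC.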

Resources

MI300X/MI300A Air-Cooled and Liquid-Cooled Systems

- MI300X, air-cooled
- MI300X, liquid-cooled
- MI300A, air-cooled
- MI300A, liquid-cooled

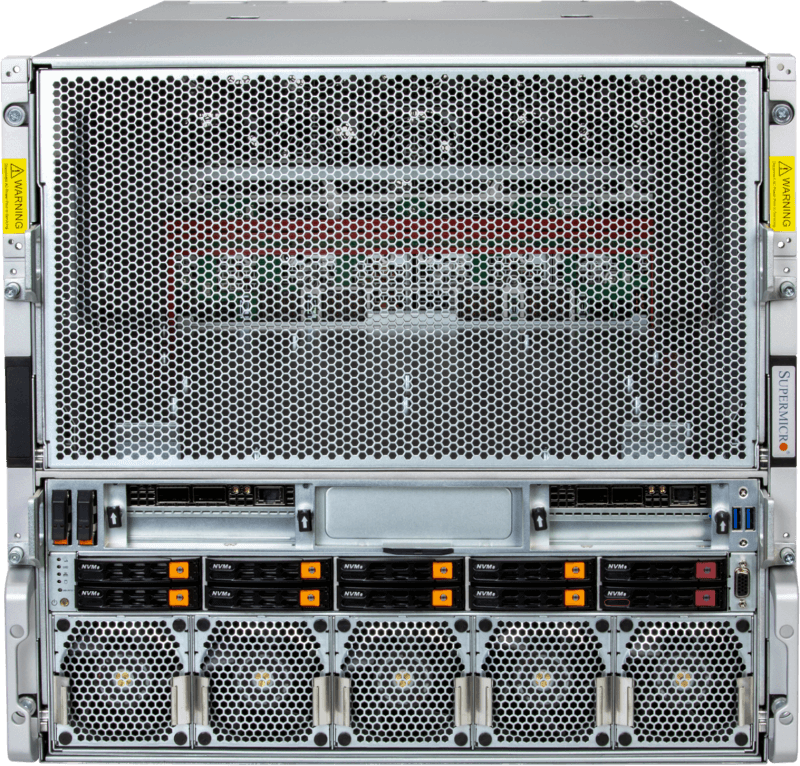

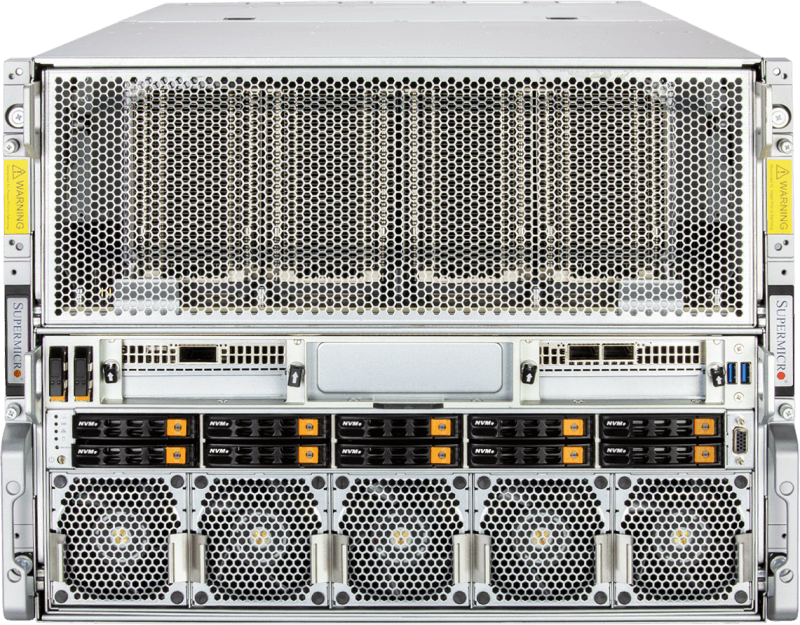

DP 8U 4-GPU OAM Architecture with CPU-to-CPU Interconnect

DP 4U 4-GPU OAM Architecture with Liquid Cooling Solution

DP 4U 4-APU Architecture with AMD Infinity Fabric™ Link

DP 2U 4-APU Architecture with Liquid Cooling Solution

Resources