Advancing AI and HPC

Proven to Perform at Scale

Supermicro unleashes three new GPU systems powered by AMD Instinct™ MI300 series accelerators to advance large-scale AI and HPC infrastructure. Built on Supermicro’s proven AI building-block system architecture, the new 8U 8-GPU system with MI300X accelerators streamlines deployment at scale for the largest AI models and reduces lead time. In addition, Supermicro’s 4U and liquid-cooled 2U 4-way systems supporting MI300A APUs, which combine CPUs and GPUs, leverage Supermicro’s expertise in multiprocessor system architecture and cooling design, finely tuned to tackle the convergence of AI and HPC.

Industry-Proven System Designs

8U high-performance fabric 8-GPU system leveraging the industry-standard OCP Accelerator Module (OAM) to support AMD Instinct™ MI300X accelerators. 4U and 2U multi-processor APU systems integrate 4 AMD Instinct™ MI300A accelerators.

Purpose Built for AI & HPC

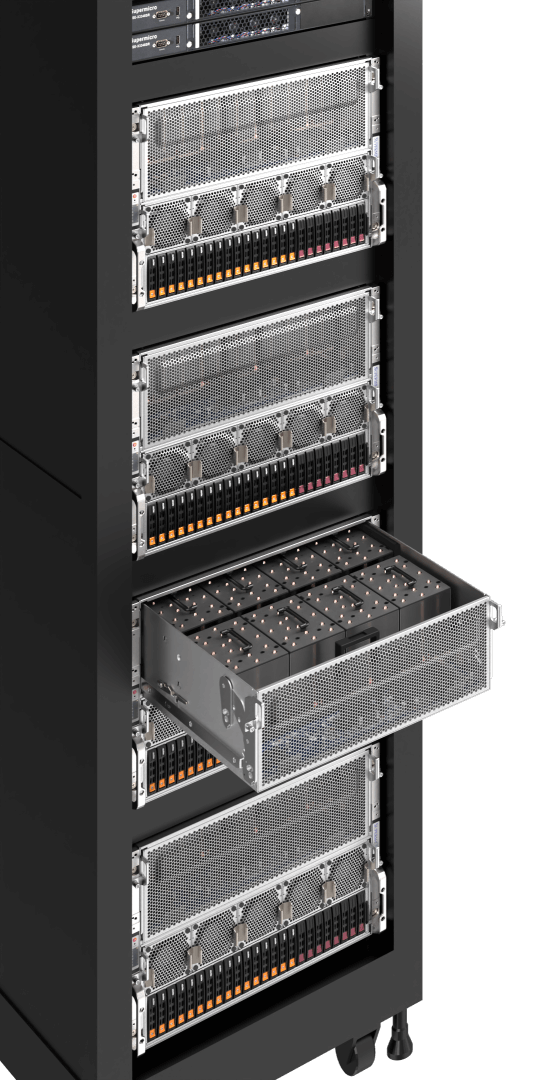

Feature maximized and power optimized, supporting up to 16x dedicated hot-swap NVMe drives, full-performance GPUs, CPUs, and memory, and high-speed networking for large-scale cluster deployments.

Advanced Cooling Options

Flexible cooling options for air- and liquid-cooled racks, with liquid-cooled solutions delivering exceptional TCO with over 51% data center energy cost savings.

Designed to Scale

Designed with full scalability in mind, supporting 8 high-speed 400G networking cards providing direct connectivity to each GPU for massive AI clusters.

Massive-Scale AI Training and Inference

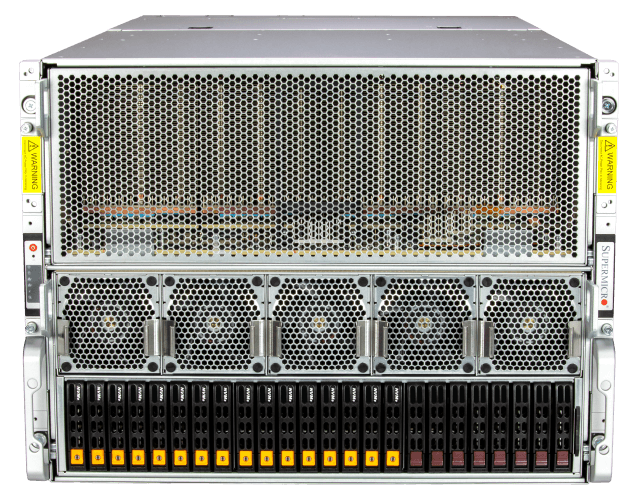

8U 8-GPU System with AMD Instinct MI300X Accelerators

AS -8125GS-TNMR2

Fully optimized for the industry-standard OCP Accelerator Module (OAM) form factor, this system provides unparalleled flexibility for rapidly evolving AI infrastructure requirements and simplifies deployment at scale. A massive pool of 1.5 TB HBM3 per server node erases AI training bottlenecks by containing even extremely large LLMs within its physical GPU memory, minimizing training time and maximizing the number of concurrent inference instances per node. Designed with full scalability in mind, the system supports 8 high-speed 400G networking cards providing direct connectivity to each GPU for massive AI training clusters.
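As a rough illustration of the 1.5 TB figure, the sketch below adds up HBM3 across the 8 GPUs and checks whether a model's weights alone would fit in one node. It assumes 192 GB of HBM3 per MI300X (AMD's published figure, not stated in this text) and 2 bytes per parameter for 16-bit weights; the function names are hypothetical. It deliberately ignores activations, KV cache, and optimizer state, so real headroom is smaller than this estimate suggests.

```python
# Back-of-the-envelope sizing sketch; not a capacity-planning tool.
GPUS_PER_NODE = 8        # from the text: 8-GPU system
HBM3_PER_GPU_GB = 192    # assumption: AMD's published MI300X capacity


def node_hbm_gb(gpus: int = GPUS_PER_NODE, per_gpu_gb: int = HBM3_PER_GPU_GB) -> int:
    """Total HBM3 capacity of one server node, in GB."""
    return gpus * per_gpu_gb


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def fits_in_node(params_billions: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in node HBM3 (no activations or KV cache)."""
    return weights_gb(params_billions, bytes_per_param) <= node_hbm_gb()


if __name__ == "__main__":
    print(node_hbm_gb())       # 1536 GB, i.e. 1.5 TB at 1024 GB per TB
    print(weights_gb(175))     # 350.0 GB for a 175B-parameter model at 16 bits
    print(fits_in_node(175))   # True
```

Under these assumptions, a 175-billion-parameter model at 16-bit precision needs about 350 GB for weights, well under the node's 1536 GB, which leaves room for multiple inference instances or larger batch state.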

Enterprise HPC and Supercomputing

Liquid-Cooled 2U Quad-APU System with AMD Instinct MI300A Accelerators

AS -2145GH-TNMR

Targeting accelerated HPC workloads, this 2U 4-way multi-APU system with liquid cooling integrates 4 AMD Instinct™ MI300A accelerators. Each APU combines a high-performance AMD CPU, GPU, and HBM3 memory, for a total of 912 AMD CDNA™ 3 GPU compute units and 96 “Zen 4” cores in one system. Supermicro’s direct-to-chip custom liquid-cooling technology enables exceptional TCO with over 51% data center energy cost savings and uses 70% fewer fans than air-cooled solutions. Rack-scale integration, optimized with dual AIOM and 400G networking, creates a high-density supercomputing cluster with up to 21 2U systems in a 48U rack.
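The per-system and per-rack totals above follow from simple multiplication, sketched below. The per-APU values (228 CDNA 3 compute units and 24 “Zen 4” cores per MI300A) are assumptions taken from AMD's published specifications rather than from this text; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApuSpec:
    # Assumption: per-APU figures from AMD's published MI300A specifications.
    compute_units: int = 228
    zen4_cores: int = 24


APUS_PER_SYSTEM = 4     # from the text: 4-way multi-APU system
SYSTEMS_PER_RACK = 21   # from the text: up to 21 systems per rack
SYSTEM_HEIGHT_U = 2     # from the text: 2U chassis
RACK_HEIGHT_U = 48      # from the text: 48U rack


def system_totals(apu: ApuSpec = ApuSpec()) -> dict[str, int]:
    """Aggregate compute resources of one 4-APU system."""
    return {
        "compute_units": APUS_PER_SYSTEM * apu.compute_units,
        "zen4_cores": APUS_PER_SYSTEM * apu.zen4_cores,
    }


def rack_totals(apu: ApuSpec = ApuSpec()) -> dict[str, int]:
    """Aggregate resources and rack-unit usage for a fully populated rack."""
    per_system = system_totals(apu)
    used_u = SYSTEMS_PER_RACK * SYSTEM_HEIGHT_U
    return {
        "apus": SYSTEMS_PER_RACK * APUS_PER_SYSTEM,
        "compute_units": SYSTEMS_PER_RACK * per_system["compute_units"],
        "zen4_cores": SYSTEMS_PER_RACK * per_system["zen4_cores"],
        "rack_units_used": used_u,
        "rack_units_free": RACK_HEIGHT_U - used_u,
    }


if __name__ == "__main__":
    print(system_totals())  # {'compute_units': 912, 'zen4_cores': 96}
    print(rack_totals())
```

With these inputs, a fully populated rack holds 84 APUs, 19,152 compute units, and 2,016 cores, and the 21 systems occupy 42U of the 48U rack.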

Converged HPC-AI and Scientific Computing

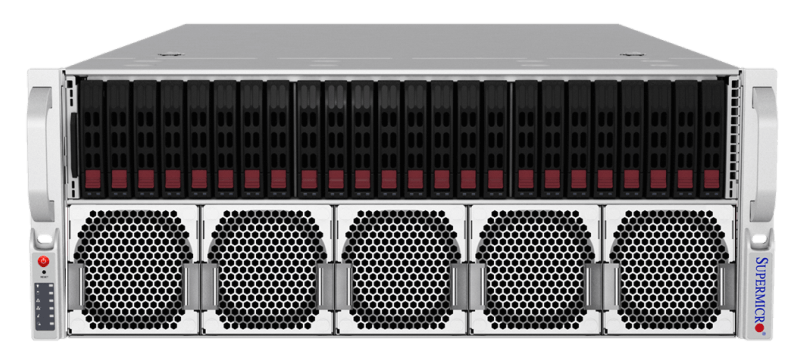

Air-Cooled 4U Quad-APU System with AMD Instinct MI300A Accelerators

AS -4145GH-TNMR

The Supermicro AS -4145GH-TNMR is a 4U 4-way multi-APU system with air cooling for flexible deployment options. It features a balanced CPU-to-GPU ratio for taking on converged HPC-AI applications and is well equipped to handle a variety of data-type precisions. In addition, a total of 912 AMD CDNA™ 3 GPU compute units, 96 “Zen 4” cores, and 512 GB of unified HBM3 memory deliver substantial performance for parallelizable workloads. The system's mechanical airflow design, together with 10 heavy-duty 80 mm fans, keeps thermal throttling at bay.